I just updated my server, and of course sabnzbd was updated along with it, from 0.4.x to 0.5.6.

I loaded a couple of nzbs from binsearch (around 40GB of data) and to my surprise, 20 minutes later all of my downloads had failed due to "memory allocation".

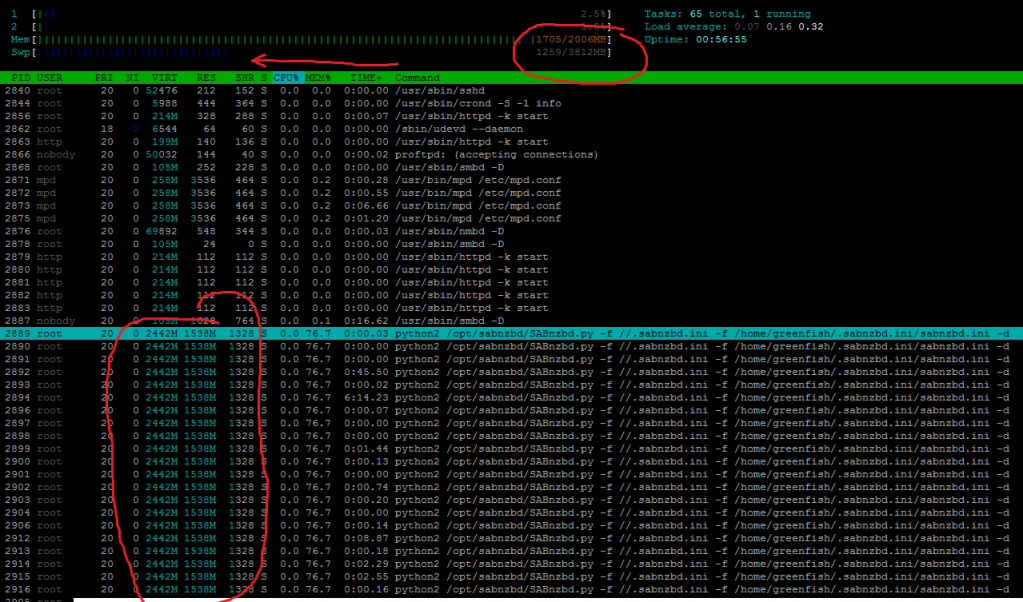

Logging into my server, a quick "free -m" and "top" showed me a huge increase in memory usage. sabnzbd tries to use up all of my RAM, and when an allocation fails, all of my downloads die with it.
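For anyone who wants to track the same numbers over time instead of eyeballing "free -m" and "top", here is a minimal sketch that reads them straight from /proc on Linux. It assumes Python 3 and that the daemon's command line contains the string "sabnzbd"; the helper names (parse_meminfo, parse_vm_rss, swap_used_percent, find_pids) are made up for this example and are not part of sabnzbd.

```python
import os


def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of integer values (kB for most keys)."""
    info = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, rest = line.split(":", 1)
        fields = rest.split()
        if fields and fields[0].isdigit():
            info[key.strip()] = int(fields[0])
    return info


def parse_vm_rss(status_text):
    """Return resident memory in kB from /proc/<pid>/status text, or None if absent."""
    for line in status_text.splitlines():
        if line.startswith("VmRSS:"):
            return int(line.split()[1])
    return None


def swap_used_percent(info):
    """Percentage of swap in use, or 0.0 when no swap is configured."""
    total = info.get("SwapTotal", 0)
    if total == 0:
        return 0.0
    return 100.0 * (total - info.get("SwapFree", 0)) / total


def find_pids(needle="sabnzbd"):
    """Assumption: the daemon can be recognised by 'sabnzbd' in its command line."""
    pids = []
    for entry in os.listdir("/proc"):
        if not entry.isdigit() or int(entry) == os.getpid():
            continue
        try:
            with open("/proc/%s/cmdline" % entry, "rb") as handle:
                cmdline = handle.read().replace(b"\0", b" ").decode("utf-8", "replace")
        except OSError:
            continue  # process exited or is not readable
        if needle in cmdline.lower():
            pids.append(int(entry))
    return pids


if __name__ == "__main__":
    with open("/proc/meminfo") as handle:
        meminfo = parse_meminfo(handle.read())
    print("MemFree: %d MB" % (meminfo.get("MemFree", 0) // 1024))
    print("Swap used: %.1f%%" % swap_used_percent(meminfo))
    for pid in find_pids():
        try:
            with open("/proc/%d/status" % pid) as handle:
                rss = parse_vm_rss(handle.read())
        except OSError:
            continue
        if rss is not None:
            print("pid %d RSS: %d MB" % (pid, rss // 1024))
```

Running it every minute or so (from cron, or a shell loop) while a download is active should show whether the resident size keeps climbing until the allocation fails.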

I only have 2GB of RAM, but that's never been an issue before. As far as I know, sabnzbd used less than 400MB on my system with older versions. My system also rarely, if ever, had to resort to swap, and certainly never used 40% of it.

After a quick look around this forum, it seems like I'm the only one so far with this problem.

I'll be back with a debug log when my server is rebooted after a new kernel upgrade.

Version: (0.5.6)

OS: (archlinux)

Install-type: (linux source)

Skin (if applicable): (Default)

Firewall Software: (none)

Are you using IPV6? (no)

Is the issue reproducible? (yes - every time I start the daemon)

My System:

AMD Phenom X2 550+

2GB RAM

archlinux kernel 2.6.36

python2 2.7.1-3

sabnzbd log http://paste.pocoo.org/show/312846/

sabnzbd python log http://paste.pocoo.org/show/312844/

sabnzbd error log empty

Showing "top" screenshot:

EDIT: There's something very wrong. All of my downloads are now failing with "Download failed - Out of your server's retention?". I'm on Giganews, which has over 800 days of retention, and the file is less than 16 days old.